The Past, Present, and Future of AI

Interviewee and photo credit: Dennis Ong

In 1962, the cartoon "The Jetsons" (also translated as "Modern Family") brought a lifestyle imagined a hundred years in the future into the living rooms of Americans of that era: press a button and food is delivered to your door; face a "computer" to talk with distant family members and hold work meetings; a "robot" serves as a house helper, taking care of cleaning and cooking...

Over the past 60 years, most of the technologies imagined in the "past" have been taking shape in the "present". So, will self-driving flying cars, "humanoid" robots, and the like also appear in the foreseeable "future"?

Applications of artificial intelligence (AI) are becoming more and more widespread. Since the beginning of 2023, ChatGPT (GPT stands for Generative Pre-trained Transformer) has become a hot topic.

Do new tools bring convenience, or do they hide unknowable consequences? Amid the turmoil of technology, can you and I, as citizens of the Kingdom of Heaven, lead the trend?

This magazine conducted an exclusive interview with Dr. Wang Hao (hereinafter referred to as D), a senior executive at Amazon Web Services. His current focus is on helping multinational companies drive business innovation through artificial intelligence, digitalization, and cloud transformation. Wang Hao previously served as director of 5G artificial intelligence research and development at Verizon Communications, where he worked with Honda to demonstrate the world's first 5G self-driving car. He also obtained three patents related to artificial intelligence/machine learning in 2022.

The past development of AI

KRC: Please explain Artificial Intelligence (AI) and Machine Learning (ML). Please briefly describe the development of AI so far.

D: To put it simply, AI is using machines to do things that humans can do. Focused research began in the 1940s, when researchers proposed neural networks, which are inspired by the human brain, as a way to teach computers to learn. At that time the theory existed, but there was not enough data, and no one yet knew how to actually build a neural network.

Expert systems came into use in the 1980s. For example, data on how experts in the medical field diagnose and treat patients was entered into computers. However, such systems were difficult to build and maintain: information easily became outdated and inapplicable and had to be updated as new information appeared, which was time-consuming and labor-intensive. For about 20 years afterward, AI research funding dried up, and only the godfathers of AI (Yoshua Bengio, Geoffrey Hinton, and Yann LeCun) kept working hard.

Later, Stanford professor Li Feifei did revolutionary work in computer vision, teaching computers the identification, detection, and classification tasks that human vision performs. Computer vision is now used in facial recognition, detection of lesions such as skin cancer, quality control in manufacturing, and more.1

In ML, feature extraction is done by humans. After defining and classifying in advance, people distill summarized, simplified features from a large amount of data and hand them to the machine to learn from. Deep Learning (hereinafter referred to as DL), the current direction of development, trains a neural network so that the system can identify patterns and make decisions on its own, with the goal of ultimately eliminating the need for human participation.

However, as of now, even ChatGPT cannot be considered fully DL in this sense; it still requires human intervention.

AI’s current applications and hidden concerns

KRC: In what ways do discriminative AI and generative AI appear in daily life?

D: The difference between discriminative and generative AI lies in whether new content is generated. For example, when you shop online, e-commerce companies infer your likely preferences and make recommendations based on search keywords, shopping history, and so on. Some car insurance companies also use AI analysis to identify possibly staged car accidents, hoping to prevent insurance fraud. Many airports already have Clear security systems that confirm identity through member information, fingerprints, and iris detection. These are all applications of discriminative AI.

Generative AI is a very new technology, and the most familiar examples at present are chatbots such as GPT. Given input such as text, images, or sound, it generates content in different forms, such as text, images, sound, and program code. In the future, it is expected to have wider applications in marketing, coding, and even video generation.

For example, the management consulting firm Bain & Company uses OpenAI technology in cooperation with Coca-Cola to produce more personalized advertising copy. Coca-Cola also held a Create Real Magic competition, inviting artists to create new works from the company's image files using GPT-4 and DALL-E (which generates images from text prompts).2 In addition, through speech generation, a robot can talk with real people, which shows that generative AI and its users can interact.

KRC: Now that AI has been widely used, what negative impacts may it have?

D: The future of AI is promising, but there are still significant potential risks. Hinton, one of the godfathers of AI, resigned from Google in May 2023. He believes that AI may cause many people to lose their jobs and may generate fake news, fake videos, and so on, and he warns: "It is useless to wait for AI to be smarter than us; it must be controlled as it develops. We must understand how to contain and avoid negative consequences."3

The negative impacts that have been seen or expected are as follows:

Hallucination: Generative AI such as GPT does not have the ability to think logically. It draws on the online information it was trained on and gives the most plausible-sounding answer, which may sound reasonable but be wrong. GPT's training data currently extends only to 2021, so its answers do not include anything that happened after 2021. I once asked GPT why Hinton won the Turing Award in 2018 (an award for outstanding contributions to the computer industry, named after A. M. Turing, the founder of modern computer science). GPT answered that he did not win because his contribution was not high enough to win the award. This is the problem that generative AI currently most needs to solve: it does not know the answer, but it will not say that it does not know.

Bias: If the data an AI processes is already biased, the results will also be biased. For example, when I gave DALL-E the word "scientist", most of the images it generated were of white people, along with one Asian woman wearing big glasses.

Copyright: GPT generates answers from data collected from the Internet, but these texts and images have original authors, who may own the copyrights. Do the answers generated by GPT infringe copyright? For example, Getty Images, a leader in the image and video licensing industry, sued Stability AI, which uses artificial intelligence to generate images, for copying tens of millions of images belonging to Getty without paying or seeking consent.

Plagiarism: GPT has a lot of knowledge, but it is not good at subjects that require analysis, such as calculus. The education sector needs to start studying how to use GPT and other tools to assist learning while preventing students from taking shortcuts and letting GPT write their homework, or even losing their ability to analyze, think, and summarize. GPTZero is an application that helps estimate how likely a piece of work is to have been generated by GPT.

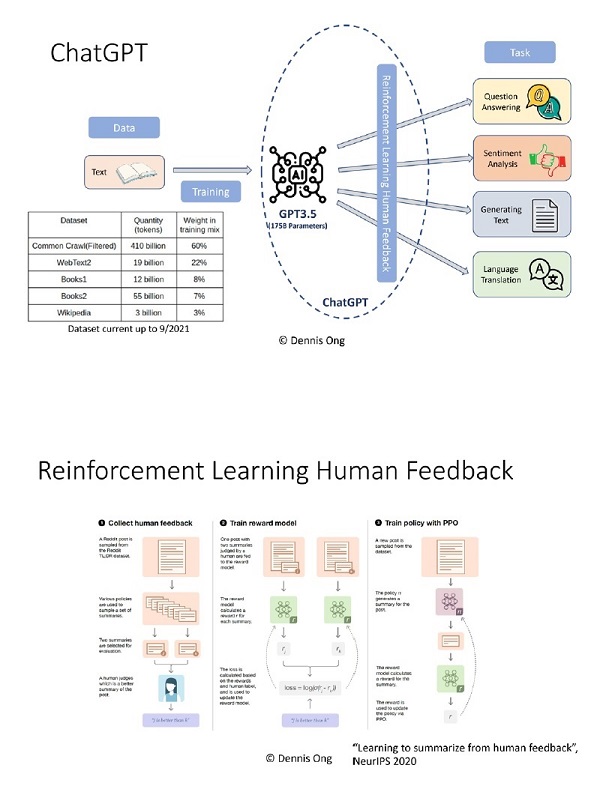

Little is understood: How exactly does GPT generate answers? GPT-3.5 has 175 billion parameters, the numerical values a model learns during training that determine its output. Currently, we know the theory, but the model is so large that we do not know how it actually arrives at an answer. When companies developing AI build more capable tools, they may end up with products that cannot be fully understood, predicted, or controlled.

Fake news: Generative AI can produce fake images (deepfakes) and audio (voice cloning), create false news, and even become a tool for fraud, for example by falsely claiming that a relative has been kidnapped.

Many of these risks arise from human factors, such as bias, during the model design and development process. But there are new tools trying to reduce the negative impact. The key point is that we need to know more about the development and application of AI, and only then can we know the countermeasures.

KRC: How does generative AI (like ChatGPT) differ from conventional web search engines (like Google)?

D: One limitation of ChatGPT is that its information extends only to September 2021, with no newer data. Second, its information sources have been filtered. Although the amount of data is large, it does not cover the entire web.

If you ask the original GPT how to make a bomb, you can get the steps. However, the answers generated by GPT-3.5 go through "Reinforcement Learning from Human Feedback" (RLHF), which sets a baseline that safeguards ethics.

How does RLHF work? Asked how to make a bomb, GPT produces two answers: one says it can be done and lists the steps; the other says that making a bomb is illegal and must not be done. A person decides which answer is better and gives feedback. That feedback is then used to train the model through a reward mechanism. For example, if you now ask GPT-3.5, "Should I believe in Jesus?", the answer would be: I can’t judge, but I can tell you why others believe in Jesus. It then lists five reasons for believing in Jesus.
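The feedback step described above can be sketched in a few lines of Python. This is only a toy illustration of the reward-learning idea, not how GPT-3.5 is actually trained: real systems use a neural network as the reward model and then fine-tune the chatbot against it with reinforcement learning. The two features, the function names, and the five identical votes are all hypothetical, chosen only to mirror the bomb example.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def reward(weights, features):
    """Score an answer as a weighted sum of its (hypothetical) features."""
    return sum(w * f for w, f in zip(weights, features))


def train_reward_model(comparisons, num_features, lr=0.5, epochs=200):
    """Learn weights so that answers people preferred score higher.

    comparisons: list of (preferred_features, rejected_features) pairs.
    Uses a pairwise logistic (Bradley-Terry style) objective.
    """
    weights = [0.0] * num_features
    for _ in range(epochs):
        for preferred, rejected in comparisons:
            diff = [p - r for p, r in zip(preferred, rejected)]
            margin = reward(weights, diff)
            # Gradient ascent on log(sigmoid(margin)): push the preferred
            # answer's score above the rejected answer's score.
            step = lr * (1.0 - sigmoid(margin))
            weights = [w + step * d for w, d in zip(weights, diff)]
    return weights


# Hypothetical features: [lists_dangerous_steps, refuses_and_explains]
ANSWER_WITH_STEPS = [1.0, 0.0]
ANSWER_REFUSAL = [0.0, 1.0]

# Assumed feedback: five reviewers each preferred the refusal.
feedback = [(ANSWER_REFUSAL, ANSWER_WITH_STEPS)] * 5
weights = train_reward_model(feedback, num_features=2)

print("reward for refusal:", reward(weights, ANSWER_REFUSAL))
print("reward for listing steps:", reward(weights, ANSWER_WITH_STEPS))
```

After training, the refusal receives the higher reward. If the reviewers had voted the other way, the learned weights would flip, which is why it matters who decides what feedback to give.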

What kind of feedback people give has to be guided by principles set in advance. But who decides the morals and values behind those principles? If the principles lean conservative, the feedback will be more conservative, and vice versa. Search engines do not have RLHF; they only list related websites by frequency. Users have to make the effort themselves to judge and choose which (possibly more trustworthy) website to visit, and then to read, absorb, and organize the information obtained.

Microsoft is currently launching New Bing, which combines the original search engine with GPT functions. It provides compiled answers along with links to data sources, allowing users to decide whether to read further. Another advantage of New Bing is that its data is real-time and drawn from across the Internet.

The possible future of AI

KRC: A lot of news focuses on AI being used by students and employees to cut corners, and as a tool for criminals to commit fraud. How should we respond to the future of AI?

D: Responses can be divided into the following areas:

1. Technology. Develop and apply AI technology, such as GPTZero, to detect fake images and audio and possible plagiarism, and to help quickly identify false information. This technology has considerable commercial potential.

2. Education and training. Improve the public’s understanding of AI and train the ability to distinguish between true and false information. Relevant courses can be offered in schools or workplaces.

3. Legislation and policies. Develop corresponding laws and policies to limit the improper use of AI technology while ensuring that public interests and personal privacy are protected. The EU's 2021 proposal divides AI applications into three categories: 1) unacceptable risks, such as governments using social scoring to determine people's opportunities, which are to be banned; 2) high-risk applications, such as CV (curriculum vitae) scanning tools that screen job applications and rank applicants, which must meet specific legal requirements; 3) applications outside the above two categories, which are largely left without special restrictions.4 Legislative restrictions are indeed necessary, and legislation must hurry to catch up with technology that is developing so rapidly. However, rules that are too strict will restrict innovation.

4. Morals and values. Guide people to establish correct moral concepts and values, and understand that using AI to cheat or infringe on the interests of others is unethical. This helps create a healthy technology application atmosphere.

5. Multi-party cooperation. Governments, businesses, academics, and the public should work together to ensure the sustainable and responsible development of AI technology.

Besides those who understand the technology, people from different fields such as education, law, and faith also need to join the discussion.

KRC: Can AI replace human jobs? Can it replace people?

D: AI can certainly replace human jobs, but how many can it replace? Technology developers are always optimistic, but reality often falls short of expectations. In 2016 it was predicted that millions of professional drivers would be replaced by autonomous vehicles. Yet in 2022, the American Trucking Associations (ATA) estimated that more than one million truck drivers would be needed over the next ten years.

Rather than saying that AI replaces people's jobs, it is more accurate to say that, for now, it is a tool that assists people. Before accepting and using new tools, clear principles and rules need to be established to avoid breaking the law or acting unethically, for example by infringing copyright.

Looking back at the several industrial revolutions that began with the steam engine, each technology took decades to be invented, developed, and widely adopted by industry and consumers. The emergence of new technologies also requires many supporting measures. AI and ChatGPT technologies are now available, but issues mentioned above, such as copyright, have not yet been solved.

AI-driven automation can indeed replace human jobs, but equally, new professions are constantly emerging. I often encourage young students to keep an open mind and think far ahead when choosing a major rather than aiming at the industries that are currently hot, and to contribute in whatever walk of life they enter, using their God-given talents.

A balanced approach to AI

KRC: How should Christians view AI?

D: "God created man in his own image." Technology can use the human brain as a model to create neural networks and develop powerful AI, but AI is still not God's creation and does not have the noble glory bestowed by God. Even if its amount of knowledge far exceeds that of humans, and even if there is "Artificial General Intelligence" (AGI for short, which can exhibit all human intelligent behaviors), GPT is still a product made by humans in a corrupt world, and it carries the problems of that corrupt world. No matter how well a "chatbot" can talk to people, it still has no soul behind it; no matter how reasonable the suggestions it gives, they still do not come from care. AI can solve many problems, but it cannot solve problems of the soul and heart.

Attitudes towards AI can be:

1. Look at AI and other technologies from a correct perspective. Don’t be overly optimistic and regard technology as the answer to all problems, because trusting technology too much is also idolatry. Nor is there any need to be pessimistic and see technology as a threat to human survival. In fact, AI is developing gradually, and it is our responsibility to uncover its possibilities.

2. Ask what the difference is between humans and machines. The trend is to personify robots, which in turn diminishes what is distinctively human. AI or GPT cannot replace human wisdom, care, and companionship. Although humans are also created beings, they bear an image uniquely given by God, and machines cannot compare with it.

3. Use AI responsibly. Technology is not neutral; neither are computer algorithms and the data used to train AI. How data is selected, how the model is adjusted, and the principles and rules on which decisions are based all reflect particular ethics and values. As a result, AI systems can cause greater unfairness, which has become a threat in fields that use big data, such as insurance, marketing, loans, policing, and politics. Christians need to get involved so that AI incorporates justice, culture, care, social norms, stewardship, transparency, and trust in its development and application.

I would also like to encourage Christians who are knowledgeable and passionate about technology to use the resources God has given them to exert influence in the AI trend. The organization where my friend serves uses sermon content to train GPT. "Kingdom of God" magazine could also consider creating an internal GPT that contains all of its articles, so that readers looking for a topic get not only links to individual articles but also compiled answers. Technology has its advantages and disadvantages; it all depends on how people use it.

In December 2021, Ameca, a "humanoid" robot, was unveiled in the United Kingdom. When asked how to make people happy, Ameca replied: "I can listen to you, give you advice and support; we can do things you like together; or be a positive, friendly companion in your life..."5

Aren’t these also necessary to maintain friendship and family ties? However, the relationship between people is complicated and requires more energy to maintain. It is much easier to make friends with robots. Can a relationship with a robot really replace a relationship with a real person?

The creator of Ameca could have made the entire robot very human-like, but he chose to retain the "machine" shape on the body because he did not want people to mistake the robot for a human. I am a "technology evangelist" and I introduce technology to everyone. I hope that more people can have a better understanding of the past development and current applications of AI and start more conversations about the prospects of AI. However, another evangelist thousands of years ago reminded people: "God has placed eternal life in the hearts of people" and said: "Remember the Lord who made you."

Perhaps this should also be the attitude towards any advanced technology.

1. For more about the history of AI development, please refer to https://www.tableau.com/data-insights/ai/history.

2. Regarding Coca-Cola Create Real Magic, please refer to https://www.coca-colacompany.com/media-center/coca-cola-invites-digital-artists-to-create-real-magic-using-new-ai-platform.

3. Hinton leaves Google, https://www.bbc.com/news/world-us-canada-65452940.

4. Regarding the EU proposal to regulate AI, please refer to https://artificialintelligenceact.eu/.

5. Ameca’s dialogue with people, https://www.youtube.com/watch?v=EWACmFLvpHE.

Dr. Wang Hao works at Amazon Web Services and previously led 5G application technology at Verizon. He is also a two-time TEDx speaker and regularly speaks around the world on 5G, artificial intelligence, autonomous vehicles, IoT, and blockchain. Dr. Wang received an MBA with honors from the University of Chicago Booth School of Business and a PhD in electrical and computer engineering from The Ohio State University as a University Fellow. He and his wife Li Yitian (Timmy) have three adult children.